4 Ways to Improve Dashboard Performance

A common problem that data analysts and report users face is slow dashboards, making users wait for charts and reports to load. The dashboards may have been fast when initially built, but the performance and responsiveness deteriorates over time.

Outspoken users raise this is a concern for analysts and technology teams to address, but often times, many users simply stop using such applications. Sub-optimal responsiveness results in “White Elephants”: an application that required significant effort and investment to build, but has no users.

This article aims to articulate four different ways to improve report performance.

The Reporting Landscape

A dashboard or reporting application usually has many technical components working in tandem. When one takes an end-to-end view of a BI application, performance improvement can come from 4 different activities:

- Improving the underlying data model

- Optimizing the individual tables in the reporting database

- Caching the report data within a BI Tool

- Setting better Defaults for reports and dashboards

Improving the Data Model

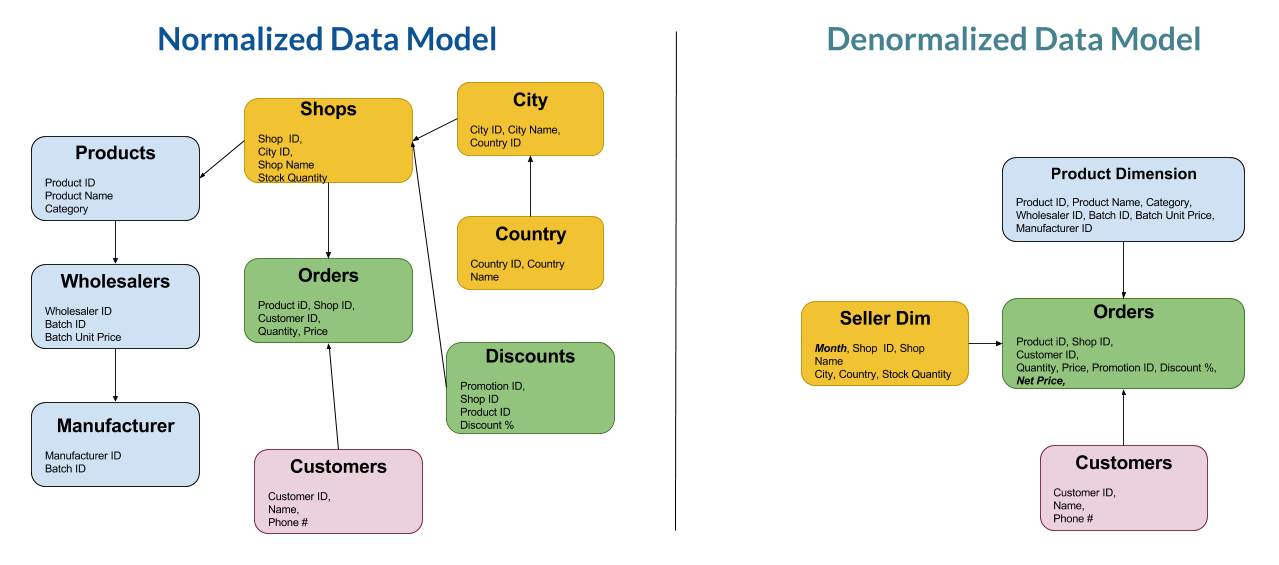

If reporting applications go against the OLTP or Production Database, the tables are likely to be “Normalized” or optimized for the performance of the product. Thus, in order to reduce data duplication and to improve efficient storage of information, data will be captured across multiple tables. However, when the same database is used for reporting, the dashboard has to traverse across so many tables to render the visualizations, slowing down the time to render.

Data Warehouses or Data Marts typically model data using a denormalized or “Flat” version of all the data points. The simplest way to understand how to model a table in a reporting context is to imagine a broad table containing many columns, encompassing data elements from several tables in the source. A commonly recommended best practice is to resolve IDs and other reference keys into readily usable, “English” Forms. An example is as below:

Thus, flattening tables ensures that the query fired by the dashboard goes against one table, fetching all the required elements in one shot.

The cost of implementing a better data model is the storage cost of additional tables and the duplication of source data. For instance, in the example above, the City Name and Country Name is repeated several times. If countries from which orders were created is identified by a numbered ID (1, 2, ..) in the source, the reporting table will have the full country name spelled out (United States of America, Singapore etc.). As tables are flattened, a lot of redundancy is created. While this is “wasteful”, the tremendous drop in storage costs makes this a relatively inexpensive way to achieve performance lifts.

Database Optimizations

There are two techniques to improve the speed with which results are returned by a database:

- Partitioning

- Indexing

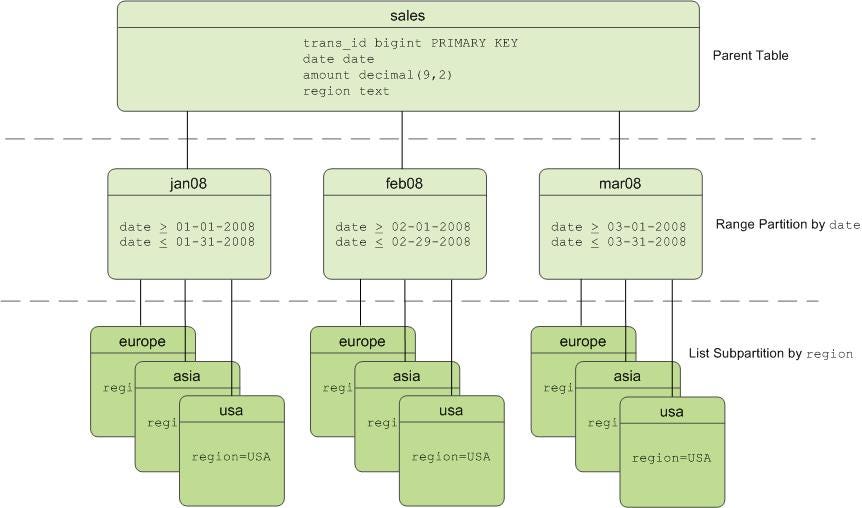

Partitioning is a setting which splits a large table (10–100M rows) into smaller portions based on a column value. Let’s take the example of a table tracking eCommerce orders or Page Visits on a website: This is likely to be a large table with several hundred million rows. Now, partitioning this table based on a date column will ensure that the table is split into as many parts as there are unique dates. Thus, the table is stored as a combination of hundreds of smaller sized tables, each containing a few hundred thousand rows. Thus, a query does not have to traverse through a very large data set, and only access the required and relevant subsets of the table. An example is as below:

Indexing is a method by which individual rows or a set of rows are provided an “address” that can be looked-up easily. An index can be based on one or more columns. When filters are applied on the columns that are part of the index, the query operation does not traverse through the entire table, but quickly gets to the set of data that is addressed by the value chosen in the filter.

The easiest way to understand an index is how you find books at a library. Books are grouped into sections of “Fiction” and “Non-Fiction”, and then into subject areas, such as “Business”, “Self-Help”, “Crime Thriller” etc. If you find yourself in the right section, books are ordered by alphabetical order of the author’s last name. Such a system helps you find a few books with the just two or three pieces of information. Indexes are the way to get to individual rows with the help of a combination of values held in a selected set of columns.

Indexing and Partitioning are, perhaps, the most powerful and scalable way to achieve performance, as they remain relatively resilient to large growth in data volumes. The cost of indexing is additional storage, as the index is stored as a “column” that is invisible to users. A well-optimized table may be 1.7x the size of the original version of the table.

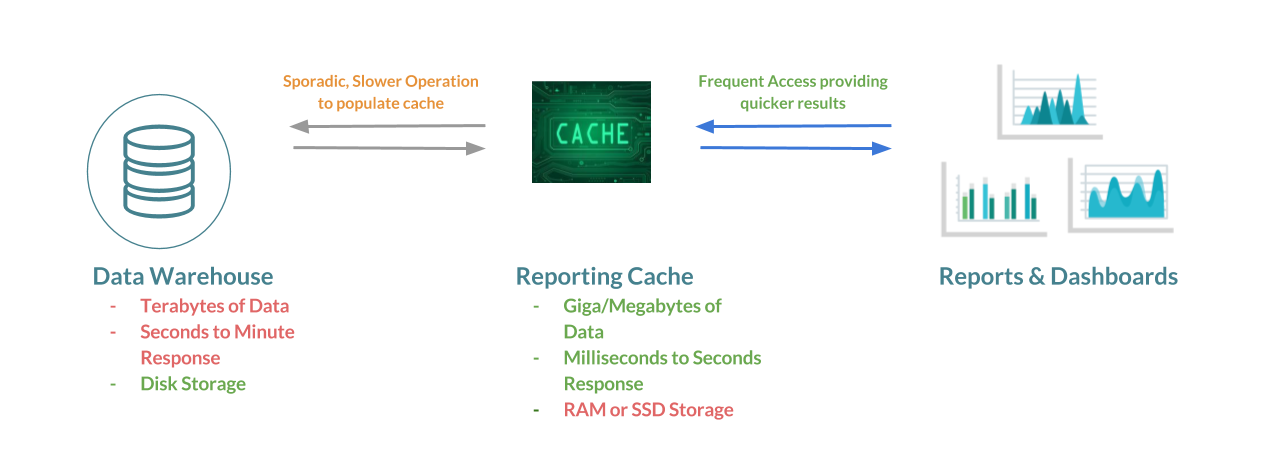

Caching Data

Several BI Reporting tools provide an option to cache the data set used by a dashboard. Users accessing the dashboard subsequently get the data from the cache, as opposed to re-executing the query against the database. Caching provides a significant performance gain in user experience, especially in dashboards accessed by multiple users. Report developers can also schedule the cache to be created and populated before office hours so that the dashboard performs well from the first usage onwards.

There are two things to consider before using the cache option:

Cost

BI vendors may charge customers for the volume of data each dashboard uses, as cache is hosted in high-performance storage clusters. Unlike database table storage, this may not be cheap, and will also scale based on the refresh frequency and usage patterns. Thus, leveraging a cache might be an expensive.

Security

The cache data will reside in a cluster hosted by the BI vendor and in some industries, such as Financial Services, this is prohibited by regulators. Moreover, if your application contains sensitive information, , such as Reporting on Customer Data, placing customer-identifying information in external servers could be of great concern. Caching automatically implies that your data ceases to be “on-premise”.

Defaults

The low-hanging fruit of tuning performance lies in how reports and dashboards are built: including a large number of filters and setting default values ensures that the first time the dashboard loads is fast. Report builders can set values that will intentionally access a smaller subset of the data.

This approach can be combined with the other approaches listed above, compounding the performance improvements. For instance, if you set filters on columns that partition a large table, you force the dashboard to access smaller sections of the underlying data set. Thus, building reports filtered on columns that are part of the index and having filters on columns that is the partition column will take advantage of the tuning done in the backend.

Conclusion

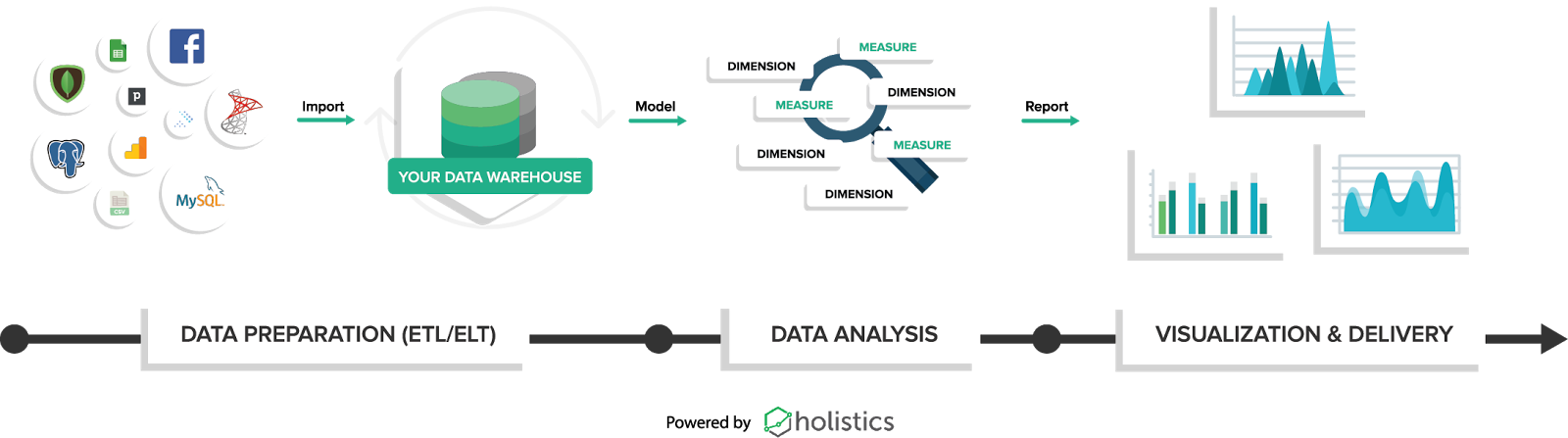

Often times, analysts and report builders only look at options within the BI Tool for performance improvements. Zooming out to look at the end-to-end BI workflow could help identify areas of improvement that make improvement sustainable. Moreover, improvements made through table or data model optimizations can benefit other reporting applications and ad-hoc queries as well. Since BI vendors and tools are focused on one aspect of the BI Ecosystem, such as Data Imports, Preparation, or Visualization, some of the steps outlined above may require more coordination and technical help.

What's happening in the BI world?

Join 30k+ people to get insights from BI practitioners around the globe. In your inbox. Every week. Learn more

No spam, ever. We respect your email privacy. Unsubscribe anytime.