How to Measure Software Development, from ‘Accelerate: The Science of Lean Software and DevOps’

Tracking the performance of your software development team is really, really difficult. The 2018 book Accelerate: The Science of Lean Software and DevOps gives us a fantastic way to measure just that. Here's how.

One of the most interesting ideas from the 2018 book Accelerate: The Science of Lean Software and DevOps is the notion of using only four key metrics to measure software delivery performance.

This idea is interesting for two reasons.

First, Accelerate was written as a result of a multi-year research project that studied the productivity of a broad set of software-producing organizations. Authors Nicole Forsgren, Jez Humble and Gene Kim compiled over 23,000 survey responses from over 2,000 unique organizations: orgs that ranged from startups to non-profits, from manufacturing firms to financial services offices, and from agriculture conglomerates to Fortune 500 companies. Accelerate, the book, is a summary of those four years of research, originally published as a set of papers in peer-reviewed journals across the world.

Second, Accelerate’s ideas are interesting because it is incredibly difficult to measure the output of a software engineering organization. Anyone who has given serious thought to this problem has probably come across the ‘measuring the unmeasurable’ objection. It goes something like this: you are the VP of software engineering. You propose some metric to measure the productivity of your team — say, for instance, you think number of commits is a good way to measure output, or you propose a simple ‘lines of code’ or LOC count.

Your team immediately revolts: “But number of commits is a stupid metric!” they say, “If you want to measure commit quantity, bad programmers will just split their code into as many small commits as possible! And LOC is even stupider! Good programmers prefer a solution that is 10 lines long, instead of 1000! And what about code quality? If a programmer has a high number of commits but the quality of each commit sucks, then we’re going to have to circle back to fix things later! Code quality is unmeasurable!”

“Alright,” you say, “Then how about number of tasks completed?”

“Stupid! Some tasks are more important than others!” they respond.

“Alright,” you say, “We could award points to each story, and then measure team output by cumulative points after a week …”

“Ugh!” your team says, “Do you think we’re going to do anything apart from hitting our story point quotas? If the deployment team has a weekly point quota to hit, and we have a weekly point quota to hit, do you think we’re going to spend our time helping people outside the team?” and someone shouts out, from the back: “Code quality is unmeasurable!”

You eventually give up.

Accelerate proposes that software development output can be measured. The authors say this after spending four years looking into the practices of many software-producing organizations. You just have to know how to look.

The Four Measures of Software Delivery Performance

How do you measure the performance of your software team?

The authors settle on four measures, adapted from the principles of lean manufacturing. Each of these measures can be seen as a counter-balance to one other metric; this is a direct application of the Principle of Pairing Indicators.

The four metrics are as follows:

- Lead Time — the average amount of time it takes from the time code is checked in to the version control system to the point in time where it is deployed to production.

- Deployment Frequency — the number of times deploys to production occur in a time period.

- Mean Time to Restore — how long it takes to resolve or roll back an error in production.

- Change Fail Percentage — what percentage of changes to production (software releases and configuration changes) fail.

The first two metrics are tempo metrics — that is, a measure of speed or throughput in classical lean manufacturing terminology. The latter two metrics are stability or reliability metrics. The stability metrics counter-balance against the tempo metrics, to guard against an overzealous pursuit of speed over quality.

Let’s take a closer look at each of these metrics, in order.

Lead Time

In lean manufacturing, lead time is the amount of time it takes to go from a customer making a request to the request being satisfied. This is problematic when adapted to software for two reasons.

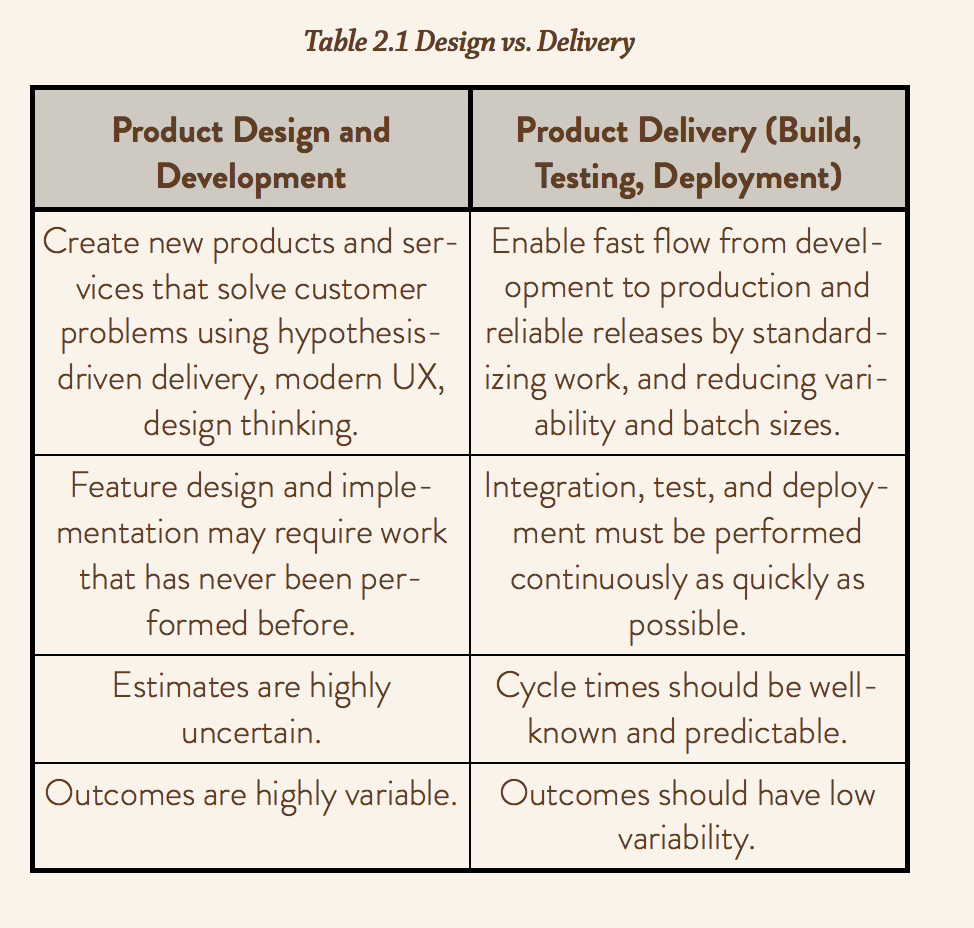

First, software isn’t like manufacturing — designing a new feature and implementing it is often a highly uncertain activity. Programmers might need to use new tools, or apply techniques they’ve never used before; product managers realise the specifications are trickier than expected mid-cycle. It is incredibly difficult to predict when a feature request can get fulfilled.

Second, the outcome of a new feature request can be highly variable. Sometimes, a delivered feature works as advertised, and fulfils the customer’s needs. But more often than not, a feature lands flat on its face, or uncovers further problems to be fixed.

As a result, the authors of Accelerate sidestep this problem by measuring the delivery of the feature, not its development. This move is informed by research that shows software development is roughly divided into two domains: a highly uncertain, highly variable product design phase in the beginning, and then a low uncertainty, low variability product delivery phase at the end of a cycle.

(This split-domain lens is quite intuitive; the people at Basecamp, for instance, call this ‘before the hump’ and ‘after the hump’ forms of work.)

Lead time thus gets transformed into the following question: how quickly does code go from an accepted commit to code running in production? Measuring this provides an indicator of software engineering speed.
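To make this concrete, here is a minimal Python sketch of the calculation. It assumes you can already collect the timestamps of the commits that went into a release, plus the time that release reached production. The function name `lead_time` and the sample timestamps are made up for illustration; nothing here is prescribed by Accelerate.

```python
from datetime import datetime, timedelta
from statistics import mean


def lead_time(commit_times: list[datetime], deployed_at: datetime) -> timedelta:
    """Average time from commit to production for the commits in one deploy."""
    seconds = mean((deployed_at - c).total_seconds() for c in commit_times)
    return timedelta(seconds=seconds)


# Hypothetical release: two commits on Monday, deployed Tuesday morning.
commits = [datetime(2024, 3, 4, 9, 0), datetime(2024, 3, 4, 15, 0)]
deployed = datetime(2024, 3, 5, 9, 0)

print(lead_time(commits, deployed))  # 21:00:00
```

The first commit waited 24 hours and the second 18 hours, so the average lead time for this deploy is 21 hours.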

Deployment Frequency

In classical lean manufacturing, batch size is considered one of the most important levers for the success of a lean manufacturing system. It is, in fact, considered key to the implementation of the Toyota Production System. The authors cite Donald Reinertsen, in his 2009 book The Principles of Product Development Flow:

Reducing batch sizes reduces cycle times and variability in flow, accelerates feedback, reduces risk and overhead, improves efficiency, increases motivation and urgency, and reduces costs and schedule growth.

This seems just as true when applied to software, but the problem with measuring batch size in the context of a software development process is that there isn’t any visible inventory to observe.

As a result, the authors decided on deployment frequency as an alternative measure to batch size. The intuition here is that software development teams who release software intermittently would have larger ‘batch sizes’, in the sense that they would have more code changes and more commits to test. On the other end of the spectrum, software teams that practice continuous, on-demand deployments typically work with very small batch sizes.
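Counting deploys is simple enough to sketch in a few lines of Python. The example below assumes you have a list of the dates on which production deploys happened (from your CI tool, a log, or a spreadsheet) and groups them by ISO week. The name `deploys_per_week` and the dates are hypothetical.

```python
from collections import Counter
from datetime import date


def deploys_per_week(deploy_dates: list[date]) -> Counter:
    """Count production deploys per (ISO year, ISO week number)."""
    return Counter(d.isocalendar()[:2] for d in deploy_dates)


# Hypothetical deploy log: three deploys in one week, one in the next.
deploy_dates = [
    date(2024, 3, 4),
    date(2024, 3, 6),
    date(2024, 3, 7),
    date(2024, 3, 12),
]

counts = deploys_per_week(deploy_dates)
for (year, week), n in sorted(counts.items()):
    print(f"{year}-W{week:02d}: {n} deploys")
```

A week is just one possible time period; the same idea works per day or per month if that suits your release cadence better.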

Mean Time to Restore

Mean time to restore (MTTR) is a really simple metric to understand. In the past, software teams used time between failures as a measure of software reliability. But today, with the rise of complex cloud-oriented systems, software failure is seen as inevitable. What matters, then, is how quickly teams can recover from software failure. How many hours before services can be restored? What is the average downtime?

This should not be surprising to most of us — after all, uptime is baked into most enterprise software SLAs. Accelerate’s authors merely take that and use it as a core measure of stability.
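As a rough sketch of the arithmetic, suppose you keep an incident log where each entry records when a failure started and when service was restored. Both the log format and the name `mean_time_to_restore` are assumptions made for this example.

```python
from datetime import datetime, timedelta


def mean_time_to_restore(incidents: list[tuple[datetime, datetime]]) -> timedelta:
    """Average downtime across incidents given as (started, restored) pairs."""
    if not incidents:
        raise ValueError("need at least one incident to compute a mean")
    total = sum((restored - started for started, restored in incidents), timedelta())
    return total / len(incidents)


# Hypothetical incident log: one 30-minute outage and one 90-minute outage.
incidents = [
    (datetime(2024, 3, 4, 10, 0), datetime(2024, 3, 4, 10, 30)),
    (datetime(2024, 3, 6, 14, 0), datetime(2024, 3, 6, 15, 30)),
]

mttr = mean_time_to_restore(incidents)
print(mttr)  # 1:00:00
```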

Change Fail Percentage

The final metric that Accelerate looks at is change fail percentage — that is, the proportion of changes to production that result in a hotfix or rollback or a period of degraded performance. Naturally, this includes software releases and configuration changes, since both are common causes of software failure.

In the context of Lean, this metric is the equivalent of ‘percent complete and accurate’, commonly used in a generic product delivery process. This is common sense, and consistent with what we understand of manufacturing companies: you don’t want to increase production throughput in a factory at the expense of quality control.
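The calculation itself is a simple ratio. Here is a minimal Python sketch, assuming each change to production is recorded with a flag saying whether it led to a hotfix, a rollback or degraded performance. The `Change` record and the function name are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Change:
    name: str
    failed: bool  # True if the change needed a hotfix or rollback, or degraded performance


def change_fail_percentage(changes: list[Change]) -> float:
    """Percentage of production changes that failed; 0.0 if there were no changes."""
    if not changes:
        return 0.0
    return 100.0 * sum(c.failed for c in changes) / len(changes)


# Hypothetical week: three releases and one configuration change, one of which failed.
changes = [
    Change("release-101", failed=False),
    Change("release-102", failed=True),
    Change("config-update", failed=False),
    Change("release-103", failed=False),
]

print(change_fail_percentage(changes))  # 25.0
```

Note that configuration changes are counted alongside releases, in line with the definition above.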

No Tradeoffs Between Speed and Stability

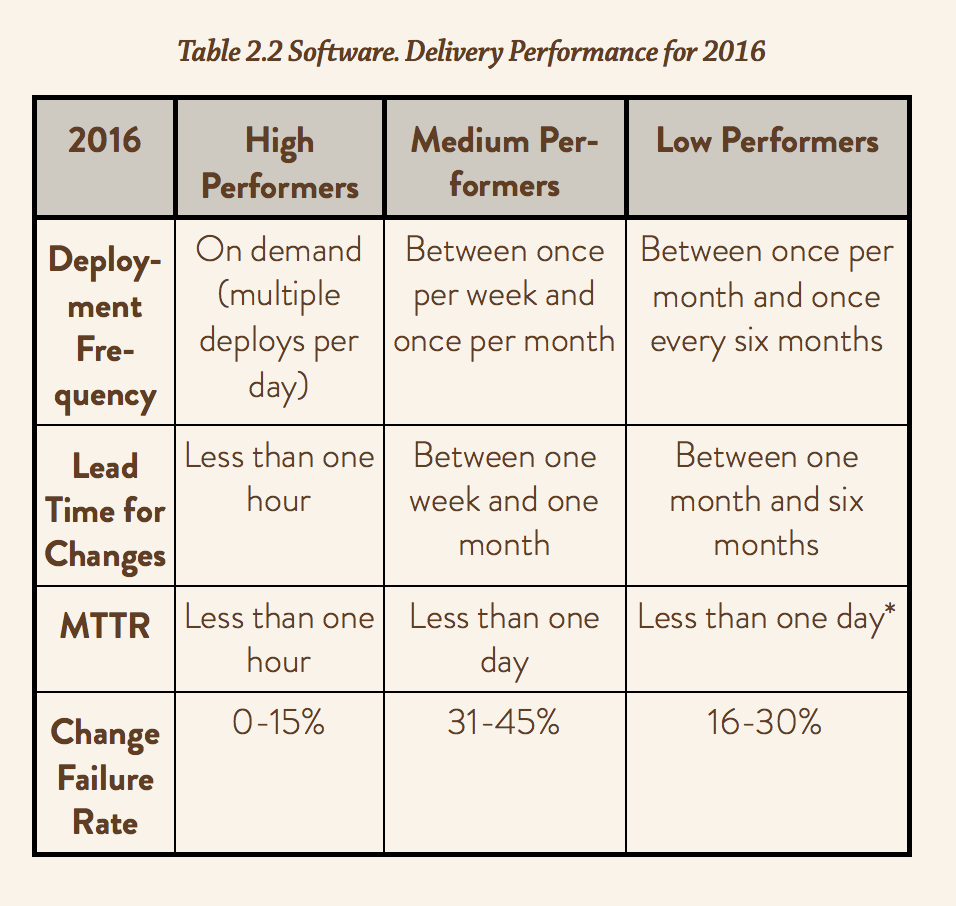

The most counter-intuitive result of this research is the authors’ discovery that companies who did well on speed also did well on stability. The authors then conclude that this finding implies no tradeoff between the two!

This runs counter to our intuitions that optimizing for tempo trades against optimizing for software reliability. More concretely, the authors based their conclusions on the finding that good software organizations seem to do well on both measures, that bad software organizations consistently perform at the bottom, and that mediocre orgs hang around in the middle, with the pattern holding on both metrics at every level of quality! Nowhere in their data set did they find companies that did excessively better at one metric at the expense of the other.

There is an argument here, of course, that the best practices are so unevenly distributed that software companies should just copy the best process and tools in the pursuit of such improvements — and that it isn’t until they hit the top levels of performance that a tradeoff between speed and stability appears. But I think this is largely an academic concern; we should feel relief that such high performance is even possible on both fronts. Accelerate’s findings tell us that high performance in one measure enables high performance in the other, and we can pursue both from a cold start.

So how do these organizations do it? The book goes into further detail over the course of three chapters. It also explains how you might drive change in your own company. I won't go into the details, but the short of it is:

- Implement continuous integration and continuous delivery (CI/CD) pipelines — which is a bit of a catch-all recommendation for a bunch of best practices. These include adjacent things like:

  - Use version control — you can’t roll back easily without version control.

  - Have test automation — with a test suite that is reliable.

  - Test data management — manage the data that your tests run on.

  - Trunk-based development — most high-performing companies work directly on master, and have few if any long-lived feature branches.

- Implement information security as part of the CI/CD process — high-performing software organizations incorporate information security into every step of the software development cycle, in a way that does not slow down delivery.

And, finally, the authors caution that the most important aspect of driving change is to:

- Change your organizational culture. In a healthy culture the right metrics become drivers for positive change. In a toxic culture, metrics become tools for control. It is important to work on culture first, before working on process changes.

(This is an implicit recommendation to read the book; we can’t possibly cover the entirety of this topic in a blog post.)

How to Measure These Four Metrics

How do you measure the four metrics that Accelerate proposes?

This isn’t very difficult. Each metric is well-defined, and you could build a dashboard, powered by a shared Google Sheets spreadsheet, to track the performance of your entire software engineering organization.

For instance, you could:

- Write a script that intercepts deploys and calculates the average time it takes for a commit to hit production, based off the timestamps in the commits related to the new release.

- Manually record the number of deploys at the end of each week.

- Write a script that periodically polls and then calculates mean time to restore from your status page (assuming you have one).

- Manually enter the % of deploys that cause errors (downtime, rollbacks, degraded performance) at the end of each week.

If this Google Sheets spreadsheet is connected to a Business Intelligence tool like Holistics, you could easily generate a dashboard for the rest of your organization.

Otherwise, exporting to CSV and loading the file into a visualisation tool like Tableau is also a workable option.
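If you go the CSV route, the export can be very small. The sketch below assumes you record one row per week with the four metrics already calculated. The column names, the `write_weekly_metrics` helper and the sample values are all hypothetical, so adapt them to whatever your spreadsheet or BI tool expects.

```python
import csv
import io

# Hypothetical column layout: one row per week, one column per metric.
FIELDS = ["week", "lead_time_hours", "deploys", "mttr_minutes", "change_fail_pct"]


def write_weekly_metrics(rows: list[dict], out) -> None:
    """Write weekly metric rows as CSV to any file-like object."""
    writer = csv.DictWriter(out, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)


rows = [
    {
        "week": "2024-W10",
        "lead_time_hours": 21.0,
        "deploys": 3,
        "mttr_minutes": 60.0,
        "change_fail_pct": 25.0,
    },
]

# An in-memory buffer keeps the example self-contained; in practice you would
# pass a file opened with open("metrics.csv", "w", newline="").
buffer = io.StringIO()
write_weekly_metrics(rows, buffer)
csv_text = buffer.getvalue()
print(csv_text)
```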

Accelerate is a great book, and it should give all of us in software organizations some hope. We now know that it is possible to deliver software both rapidly and safely — and we know the four measures necessary to get at that goal. Read the book, it’s good.
